Let’s say that you want to know whether the number of visitors you get is related to how much you spend on media. The number of visitors and media spend are both data attributes. If we want to understand the relationship between these two data attributes (beyond just eyeballing them), we have to understand whether they covary. That means that when one variable deviates from its mean, we would expect a related variable to deviate from its mean in a similar way. Measuring this is what correlation between data attributes is about.

In this post I will walk through what covariance is and how it is calculated, and then use R to test for correlation. This is a very valuable technique for determining relationships between variables, and it is not difficult to apply.

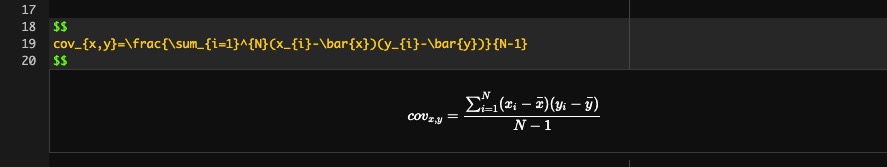

Covariance Equation

For example, let’s say that we think that the more emails we send to a subscriber, the higher the conversion rate will be, and we want to know if these two are really correlated. We look at some of the data and we find that subscribers who received more emails converted at a higher rate than those who received fewer. It appears that these two variables are related. We could feel a little more confident if we could use some kind of calculation. Luckily, there is one: the covariance equation.

\[\text{cov}_{x,y}=\frac{\sum_{i=1}^{N}(x_{i}-\bar{x})(y_{i}-\bar{y})}{N-1}\]

This equation may look daunting, but it basically takes the deviations from the mean in one variable and multiplies them by the corresponding deviations in the other variable. Those products are called the cross-product deviations. They are then added together (the top part of the equation) and divided by the number of subscribers in the sample minus one (there is a good reason for the minus one; check out this video for an explanation: http://bit.ly/2FU7PFm). If the covariance value is positive, then the two variables are, to some degree, positively related. If the covariance is negative, then the two variables are, to some degree, negatively related.
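As a minimal sketch of the calculation described above, here is the covariance worked out step by step in R and compared with the built-in cov() function. It assumes R's built-in mtcars data (the same data used later in this post) in place of email data; the variable names are only for illustration.

```r
# Two variables from R's built-in mtcars data (illustrative choice)
x <- mtcars$cyl
y <- mtcars$mpg
n <- length(x)

# Cross-product deviations: each deviation in x times the matching deviation in y
cross_products <- (x - mean(x)) * (y - mean(y))

# Sum them and divide by N - 1
cov_manual <- sum(cross_products) / (n - 1)

# R's built-in sample covariance, for comparison
cov_builtin <- cov(x, y)

print(c(manual = cov_manual, builtin = cov_builtin))
```

The two values should agree, and the negative sign says that cars with more cylinders tend to have lower mpg.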

This is a good start in understanding correlation. However, there are issues with using the covariance equation. The most glaring is that it is not a standardized measurement. That means that its size depends on the units in which the variables are measured, so covariances from different datasets cannot be compared directly.
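To see the scale problem in practice, the sketch below changes only the units of one variable. It assumes the built-in mtcars data, where wt is recorded in thousands of pounds; the variable names are hypothetical.

```r
# Car weight as stored in mtcars (units of 1000 lbs) and converted to lbs
wt_klb <- mtcars$wt
wt_lb  <- wt_klb * 1000

# Same cars, same relationship with mpg, different units
cov_klb <- cov(wt_klb, mtcars$mpg)
cov_lb  <- cov(wt_lb, mtcars$mpg)

print(c(cov_klb = cov_klb, cov_lb = cov_lb))
```

The second covariance is 1000 times the first even though nothing about the relationship has changed, which is why the raw value is hard to interpret on its own.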

Standardize the Covariance

To move beyond the covariance equation, we need to standardize the covariance. The best way to do that is to use the standard deviation. If we divide the covariance by the product of the two variables’ standard deviations, we get a standardized measurement that we can use to compare different sets of data. This is called the correlation coefficient:

\[r = \frac{\text{cov}_{x,y}}{s_x s_y}\]

Here \(s_x\) and \(s_y\) are the sample standard deviations of the two variables. By using this equation (which is known as the Pearson correlation coefficient), you will have a value between -1 and 1. A -1 would indicate a perfectly negative relationship, a 1 would indicate a perfectly positive relationship, and a 0 would indicate no linear relationship.
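The standardization can be checked directly in R. This is a small sketch, again assuming the built-in mtcars data, that divides the covariance by the two standard deviations and compares the result with cor().

```r
x <- mtcars$cyl
y <- mtcars$mpg

# Covariance divided by the product of the sample standard deviations
r_manual <- cov(x, y) / (sd(x) * sd(y))

# Built-in Pearson correlation (the default method of cor())
r_builtin <- cor(x, y)

print(c(manual = r_manual, builtin = r_builtin))
```

Both cov() and sd() use the N - 1 denominator, so the two results should match.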

From here, we can test probabilities by transforming \(r\) into a \(z\)-score. We do that by first transforming \(r\), because the sampling distribution of the Pearson correlation coefficient is not normal. Take \(\frac{1}{2}\) the natural log of \(1+r\) divided by \(1-r\):

\[z_r = \frac{1}{2}\ln\left(\frac{1+r}{1-r}\right)\]

Z-Score

To transform the result into a \(z\)-score, divide it by its standard error, which is \(1\div\sqrt{N-3}\).
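Here is a rough sketch of those two steps in R, assuming the built-in mtcars data. The two-sided p-value and the back-transformed 95% interval are additions for illustration; the interval uses tanh() to undo the transformation.

```r
r <- cor(mtcars$cyl, mtcars$mpg)
n <- nrow(mtcars)

# Step 1: transform r (this is the same as atanh(r))
z_r <- 0.5 * log((1 + r) / (1 - r))

# Step 2: divide by the standard error to get a z-score
se <- 1 / sqrt(n - 3)
z  <- z_r / se

# Two-sided p-value from the standard normal distribution
p_value <- 2 * pnorm(-abs(z))

# 95% confidence interval, built on the transformed scale and converted back to r
ci <- tanh(z_r + c(-1, 1) * qnorm(0.975) * se)

print(c(z = z, p_value = p_value))
print(ci)
```

The interval computed this way should match the confidence interval that cor.test() reports for the Pearson method.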

Another way to test \(r\) is to use the \(t\)-statistic instead of a \(z\)-score. To get the \(t\)-statistic, multiply \(r\) by \(\sqrt{N-2}\) and divide the result by \(\sqrt{1-r^2}\). The result is compared against a \(t\)-distribution with \(N-2\) degrees of freedom.
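The same calculation can be sketched in R and checked against cor.test(), which reports a t-statistic for the Pearson method. This assumes the built-in mtcars data; the variable names are illustrative.

```r
x <- mtcars$cyl
y <- mtcars$mpg
n <- length(x)
r <- cor(x, y)

# t-statistic for r, with N - 2 degrees of freedom
t_manual <- r * sqrt(n - 2) / sqrt(1 - r^2)
p_manual <- 2 * pt(-abs(t_manual), df = n - 2)

# cor.test() stores the same quantities in its result object
ct <- cor.test(x, y, method = "pearson")

print(c(t_manual = t_manual, t_cor_test = unname(ct$statistic)))
print(c(p_manual = p_manual, p_cor_test = ct$p.value))
```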

This is a basic explanation of how to correlate data attributes. To get into more practical examples, we’ll use R and a couple of different types of correlation calculations.

Main Types of Correlation

There are three main types of correlation:

- Pearson

- Spearman

- Kendall

I am going to load some data and then show how R can be used with each type of correlation.

Pearson

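Pearson’s correlation, and especially its significance test, assumes parametric data (interval-level variables that are roughly normally distributed). If the data is not parametric, one of the other two can be used. Another option is bootstrapping, which estimates confidence intervals by resampling and so does not rely on the normality assumption.

I’m going to use the mtcars data to show how to use R for correlation: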

    str(mtcars)

    ## 'data.frame': 32 obs. of 11 variables:
    ##  $ mpg : num 21 21 22.8 21.4 18.7 18.1 14.3 24.4 22.8 19.2 ...
    ##  $ cyl : num 6 6 4 6 8 6 8 4 4 6 ...
    ##  $ disp: num 160 160 108 258 360 ...
    ##  $ hp : num 110 110 93 110 175 105 245 62 95 123 ...
    ##  $ drat: num 3.9 3.9 3.85 3.08 3.15 2.76 3.21 3.69 3.92 3.92 ...
    ##  $ wt : num 2.62 2.88 2.32 3.21 3.44 ...
    ##  $ qsec: num 16.5 17 18.6 19.4 17 ...
    ##  $ vs : num 0 0 1 1 0 1 0 1 1 1 ...
    ##  $ am : num 1 1 1 0 0 0 0 0 0 0 ...
    ##  $ gear: num 4 4 4 3 3 3 3 4 4 4 ...
    ##  $ carb: num 4 4 1 1 2 1 4 2 2 4 ...

Let’s say we want to know whether the number of cylinders is correlated with mpg. I’m going to use cor.test() to check:

    cor.test(mtcars$cyl, mtcars$mpg, method = 'pearson', conf.level = 0.95)

    ##
    ##  Pearson's product-moment correlation
    ##
    ## data: mtcars$cyl and mtcars$mpg
    ## t = -8.9197, df = 30, p-value = 6.113e-10
    ## alternative hypothesis: true correlation is not equal to 0
    ## 95 percent confidence interval:
    ##  -0.9257694 -0.7163171
    ## sample estimates:
    ##       cor
    ## -0.852162

The output shows a correlation of -0.85, which indicates that as the number of cylinders goes up, mpg goes down. Intuitively, that makes sense. A correlation of that size also indicates a large effect, and the p-value indicates statistical significance.
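Since Pearson's test leans on the data being roughly normal, it can help to check that before trusting the p-value. One common, though imperfect, option is the Shapiro-Wilk test from base R, sketched here for both variables. This check is an addition for illustration and not part of the analysis above.

```r
# Shapiro-Wilk normality test for each variable (a small p-value suggests non-normality)
sw_mpg <- shapiro.test(mtcars$mpg)
sw_cyl <- shapiro.test(mtcars$cyl)

print(c(mpg_p = sw_mpg$p.value, cyl_p = sw_cyl$p.value))
```

Formal normality tests are only a rough guide, especially with 32 observations. A variable such as cyl, which takes only a few distinct values, is a natural candidate for the rank-based methods covered next.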

Spearman

The advantage of Spearman’s rho is that it is non-parametric. It first ranks the data, then applies Pearson’s equation to the ranks. Spearman’s rho is not a good choice if the dataset is small with a large number of tied ranks (many of the numbers are the same). In that case it’s better to use Kendall’s tau instead.

I’m going to use the same data from above for this example and the next, just to show the different outputs. In the next two, I’m going to add exact = FALSE. With tied values R cannot compute an exact p-value and warns about it; exact = FALSE asks for the approximate p-value directly, which avoids the warning.

    cor.test(mtcars$cyl, mtcars$mpg, method = 'spearman', exact = FALSE, conf.level = 0.95)

    ##
    ##  Spearman's rank correlation rho
    ##
    ## data: mtcars$cyl and mtcars$mpg
    ## S = 10425, p-value = 4.69e-13
    ## alternative hypothesis: true rho is not equal to 0
    ## sample estimates:
    ##        rho
    ## -0.9108013
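The "rank first, then Pearson" description can be verified with a short sketch: rank both variables with rank() (which gives tied values their average rank) and pass the ranks to the ordinary Pearson calculation.

```r
# Pearson correlation computed on the ranks of each variable
rho_ranks <- cor(rank(mtcars$cyl), rank(mtcars$mpg))

# Built-in Spearman correlation
rho_builtin <- cor(mtcars$cyl, mtcars$mpg, method = "spearman")

print(c(ranks = rho_ranks, builtin = rho_builtin))
```

Both values should equal the rho reported by cor.test() above.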

Kendall

Kendall’s tau is another non-parametric correlation measure. As mentioned above, it works best for small datasets with a large number of tied ranks (many of the numbers are the same).

    cor.test(mtcars$cyl, mtcars$mpg, method = 'kendall', exact = FALSE, conf.level = 0.95)

    ##
    ##  Kendall's rank correlation tau
    ##
    ## data: mtcars$cyl and mtcars$mpg
    ## z = -5.5913, p-value = 2.254e-08
    ## alternative hypothesis: true tau is not equal to 0
    ## sample estimates:
    ##        tau
    ## -0.7953134
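To show what tau is counting, here is a sketch that builds it from pairs of cars. For every pair it checks whether cyl and mpg move in the same direction (concordant) or in opposite directions (discordant), then scales the difference by the number of pairs that are not tied. This is the tie-adjusted version usually called tau-b, which is what R's cor() returns for method = "kendall"; the variable names are illustrative.

```r
x <- mtcars$cyl
y <- mtcars$mpg

# All unordered pairs of observations (one pair per column)
pairs <- combn(length(x), 2)

# Direction of the difference within each pair: -1, 0 (tie) or 1
sx <- sign(x[pairs[1, ]] - x[pairs[2, ]])
sy <- sign(y[pairs[1, ]] - y[pairs[2, ]])

# Concordant pairs contribute +1, discordant pairs -1, tied pairs 0
concordant_minus_discordant <- sum(sx * sy)

# Scale by the number of pairs not tied in x and not tied in y
tau_manual <- concordant_minus_discordant / sqrt(sum(sx != 0) * sum(sy != 0))

tau_builtin <- cor(x, y, method = "kendall")

print(c(manual = tau_manual, builtin = tau_builtin))
```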

Bootstrapping

Bootstrapping wraps the correlation calculation in a function, then re-runs it on many resamples of the data drawn with replacement. The number of resamples is set in the boot() call with the R argument. For this example, I’m going to switch to the cor() function:

    library(boot)

    # Statistic for boot(): d is the data, i holds the row indices of one resample
    bootKendall <- function(d, i){
      cor(d$cyl[i], d$mpg[i], use = "complete.obs", method = "kendall")
    }

    # Draw R = 1000 resamples with replacement and recompute tau on each one
    bootcorr <- boot(data = mtcars, statistic = bootKendall, R = 1000)

    bootcorr

    ##
    ## ORDINARY NONPARAMETRIC BOOTSTRAP
    ##
    ##
    ## Call:
    ## boot(data = mtcars, statistic = bootKendall, R = 1000)
    ##
    ##
    ## Bootstrap Statistics :
    ##       original        bias    std. error
    ## t1* -0.7953134 -0.001265437   0.02881028

    boot.ci(bootcorr, conf = 0.95)

    ## Warning in boot.ci(bootcorr, conf = 0.95): bootstrap variances needed for
    ## studentized intervals

    ## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS
    ## Based on 1000 bootstrap replicates
    ##
    ## CALL :
    ## boot.ci(boot.out = bootcorr, conf = 0.95)
    ##
    ## Intervals :
    ## Level      Normal              Basic
    ## 95%   (-0.8505, -0.7376 )   (-0.8622, -0.7472 )
    ##
    ## Level     Percentile            BCa
    ## 95%   (-0.8434, -0.7284 )   (-0.8359, -0.7055 )
    ## Calculations and Intervals on Original Scale
    ## Some BCa intervals may be unstable

The output gives us the original Kendall’s tau value, plus the bias and standard error of the bootstrap estimates. Both of these numbers are small relative to tau, which suggests that the estimate is stable across resamples.

I have also included boot.ci(), which gives us confidence intervals for the correlation. One thing to look for is whether an interval crosses zero. If it does, we cannot rule out that the true correlation in the population is zero, or even of the opposite sign, and we may need more data before drawing conclusions. Here every interval sits well below zero.
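To make the resampling idea concrete, the percentile interval can also be sketched without the boot package, using only sample() and quantile() from base R. The seed value is arbitrary and only there for reproducibility, and the variable names are hypothetical.

```r
set.seed(42)  # arbitrary seed, assumed here only so the run is repeatable

n <- nrow(mtcars)

# Resample the rows with replacement 1000 times and recompute Kendall's tau each time
boot_taus <- replicate(1000, {
  i <- sample(n, replace = TRUE)
  cor(mtcars$cyl[i], mtcars$mpg[i], method = "kendall")
})

# Percentile interval: the middle 95% of the bootstrap estimates
ci_percentile <- quantile(boot_taus, probs = c(0.025, 0.975))

print(ci_percentile)
```

The result should land close to the Percentile interval from boot.ci(), though not identical, because the resamples differ and boot.ci() interpolates the quantiles slightly differently.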

Conclusion

Going back to the example in the beginning, you can now take the number of emails sent to each subscriber and the number of conversions for each subscriber during a given period, run a correlation function in R such as cor.test(), and get solid statistical insight to inform decisions about email campaigns going forward.