Advanced SQL for analysts is different than advanced SQL for other tasks (like DBAs). I will be concentrating on SQL that will be useful to you as an analyst. This is not an exhaustive list of SQL functions and features, but ones that I have found useful in my work as an analyst.

What is SQL?

SQL is the language used to communicate to relational database management systems (RDBMS). E.F. Codd wrote a paper in 1970 describing a completely different way of structuring data. Up till then, data was generally organized in a hierarchical file structure. A lot of code was needed to work with the stored data. Codd’s paper described a model of storing data as relationships between database objects and their real-world counterparts. This model would lead to a simplified the data retrieval process.

Beyond what we are interested in as Analysts, was the idea of separating the physical storage from the data model. Codd was already thinking of OOP (object-orientation programming) before it became a thing. That’s not to say that it’s been perfectly implemented in the platforms we have today, it hasn’t. Most RDBMS still have a 1-to-1 relationship between the data and physical storage (although that cannot be said of GCP & AWS) as well as issues with SQL.

If you find SQL limiting, you’re not alone. Chris Date, in his textbook An Introduction to Database Systems, goes as far as stating “it is not at all clear that today’s SQL products really deserve the label “relational” at all!“, which was aimed at both the current RDBMS as well as SQL. In fact, throughout his book he uses his own theoretical language, Tutorial D, since he finds SQL falls short in too many areas.

SQL as a Programming Language

Personally, I like SQL just fine. I find myself writing a lot of SQL, as well as a lot of R. As an Analyst, I find the two work together very well. I think that SQL as a language is not that difficult to understand. Rather, it is how to write it to retrieve the data you want that is more challenging. It takes imagination to write SQL in an efficient way that doesn’t over-burden the database (and I’m not even addressing the proper use of indexes here).

SQL is not like other programming languages. It should be written in concise statements. Loops, for example, should be avoided because of the load that they tend to put on the system. Also, SQL was written to be a set-based language, so loops are not easily optimized. Personally, I’ve also had issues with branches inside of stored procedures. The execution plan has to be rewritten on every execution because of different SQL statements executing within the stored procedures. A better option, in that case, would be to have a separate stored procedure for each SQL statement that retrieves data from different tables.

SQL Composition & Commands

SQL is composed of set of commands that can be roughly divided into sub-languages:

- Data Query Language (DQL) – Used to perform queries and retrieve data (what we’re mostly interested in).

- Data Definition Language (DDL) – Language for the creation of database objects (tables/indexes/relations, etc.).

- Data Control Language (DCL) – Used to allow access to data (authorization).

- Data Manipulation Language (DML) – Language used to add/update/delete data.

The SELECT command is the command most used by Analysts to retrieve data. A basic query would look something like this:

SELECT col_1, col_2 FROM table_a WHERE col_3 > 35

If we wanted to group data together, we could do it by using a GROUP BY command:

SELECT col_1, SUM(col_2) as sum_col_2 FROM table_a WHERE col_3 > 35 GROUP BY col_1 HAVING SUM(col_2) < 1000

I have grouped by col_1, and like the previous example, I have limited the data to only those rows where col_3 is less than 35. I have added another command HAVING, which is like a WHERE but for after the grouping.

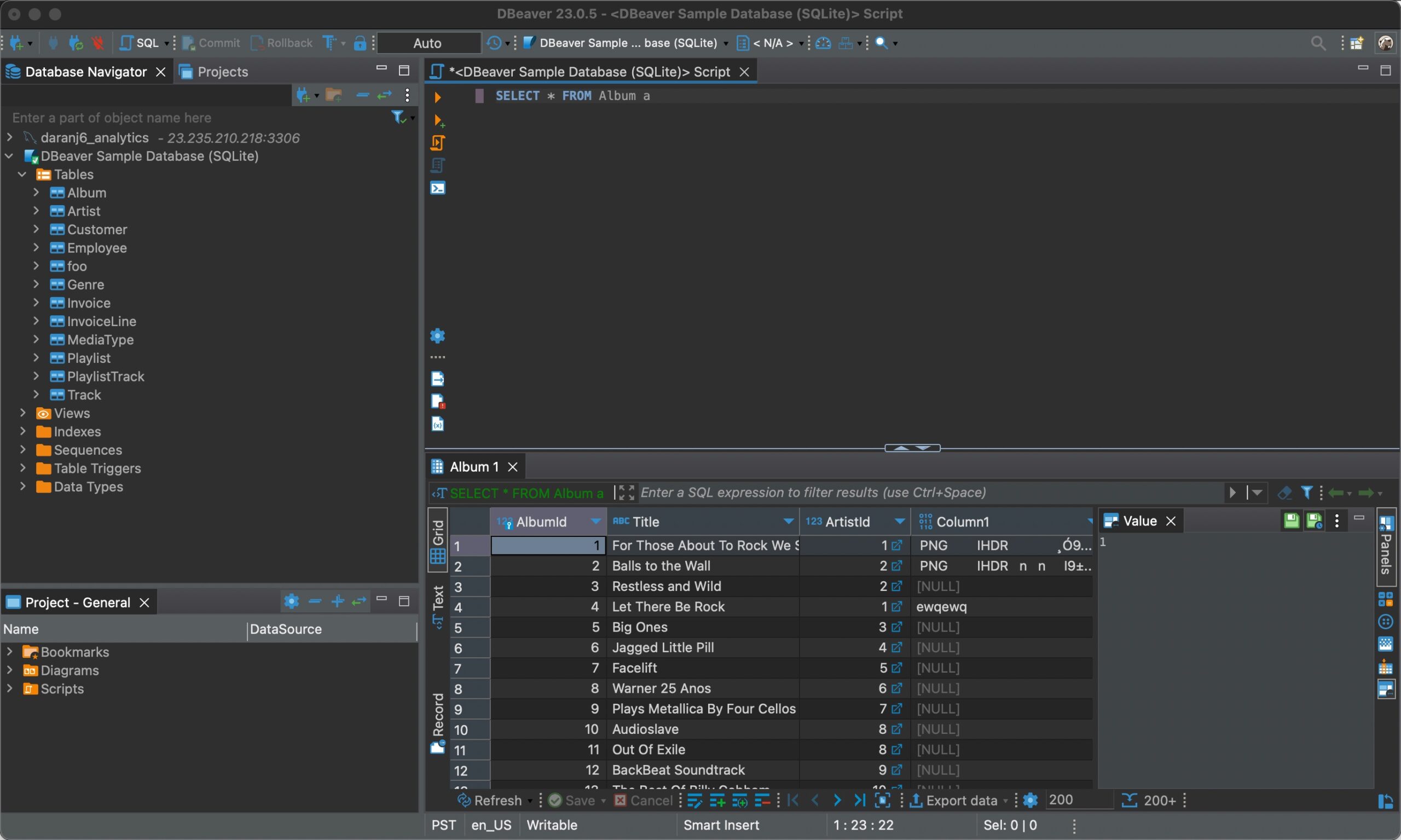

Database Basics

Those are just some basic commands for using SQL. As an Analyst, there is a lot of stuff you don’t usually have to be concerned about (but should be aware of), like the following:

- Database Design – How the tables (or relvars, as Chris Date calls them – relational variables) and columns (attributes) are related to each other. To be most effective, tables should follow normal forms (definitely these: 1NF – BCNF) to avoid decompositional loss and repetitive data storage.

- Referential Integrity – Enforcement of joins between tables to ensure there are no orphaned records. It can also reduce query time. When there is little to no referential integrity, I would recommend double checking all the data for issues between tables.

- Indexes – Shortcut to retrieve data, like a library catalogue. Ranges of data, like dates, can be used in clustered indexes so they are physically close as well. Indexes can help avoid full table scans and can significantly decrease data retrieval time.

- Data Types – The type of data you are retrieving. Sometimes you will need to know this (like retrieving a column of JSON data).

- Locking/Blocking Issues – Sometimes a query can lock parts or all of a table. Generally these issues happen when modifying the data in the table. Blocking is much more likely in a database where there is little to no referential integrity.

Temporary Data Storage Mechanisms

There are a few different ways to temporarily store data that you are working with. Why would you want to do that? Well, you may need to summarize a table before joining it to another table. You will need something to hold that summarized data. Here are a few options:

- Derived Table

- Temp Table

- Table Variable

- Common Table Expression (CTE)

In the past, I was a SQL Server DBA as well as a developer and temp tables could be a huge bottleneck (table variables and CTEs didn’t exist back then). That is not always the case these days. You can use a table variable or a SCHEMA_ONLY memory-optimized table variable. As a matter of fact, in MySQL 5.6 or earlier, a derived table would have been stored as a temp table anyway. However, later releases of MySQL do not act that way, instead they are likely to merge derived tables into the outer query block.

CTEs

I haven’t used table variables or CTEs much. I found them to be somewhere between a derived table and a temp table and I didn’t warm to them. However, I think they can have the same impact as the other temporary data storage mechanisms. For an Analyst, none of these are necessarily right or wrong, more a matter of preference. If you are creating code for a production database, there are greater concerns over query cost and maintenance.

Derived Table Code

Here is an example of a derived table. In this case, it is used to summarize some data before adding it to another set of data:

SELECT ta.col_1, sum_col_3, SUM(ta.col_2) as sum_col_2

FROM table_a ta INNER JOIN

(SELECT col_2, sum(col_3) as sum_col_3

FROM table_b

WHERE col_2 != 10

GROUP BY col_2) tb

on ta.col_1 = tb.col2

WHERE col_3 > 35

GROUP BY col_1,

sum_col_3

HAVING SUM(col_2) < 1000

In this statement I have added table aliases (the ta and tb – a derived table must have an alias) and the derived table is linked with an inner join on table_a and table_b by table_a.col_1 and table_b.col_2 and returning the sum of col_3 from table_b where the count of col_4 is not equal (!=) to 10. Then I am only retrieving rows where table_a.col_3 is greater than 35 and grouping all by table_a.col_1 and table_b.sum_col_3 that have a sum of table_a.col_2 less than 1000…this is starting to get complicated.

Temp Table Code

Here’s what the above would look like using a temp table. First, you need to check if the temp table exists and drop it if it does. Then you need to create the temp table and input the data into it. Finally, you need to join the data together and return the data to the caller.

DROP TEMPORARY TABLE IF EXISTS temp_table_b;

CREATE TEMPORARY TABLE temp_table_b

SELECT col_2,

sum(col_3) as sum_col_3

FROM table_b

WHERE count(col_4) != 10;

SELECT ta.col_1, tb.sum_col_3, SUM(ta.col_2) as sum_col_2

FROM table_a ta INNER JOIN

temp_table_b tb on ta.col_1 = tb.col2

WHERE ta.col_3 > 35

GROUP BY ta.col_1,

tb.sum_col_3

HAVING SUM(ta.col_2) < 1000;

So you have sixteen lines of code instead of eleven. But for some, this is more readable. There’s one more caveat, since it’s a temp table, it could be dropped sooner than you would like – it’s not permanent – and if this is part of a larger, more complex series of SQL statements, it could be more challenging to work with. But, as far as performance, they are likely similar.

Embedding SQL

If you are embedding your SQL in JavaScript, R or other programming language, I would recommend keeping your SQL code together (easier to update than writing a bunch of strings interspersed throughout your code and concatenating them together) and using it only to get the data that you need. Then use JavaScript, R or other programming language to do any looping or transformations. Don’t make the mistake of using SQL like you would other programming languages either – think “set-based”.

If performance is a concern, you will first need to check the performance difference between writing a temp table, derived table, etc. But as an Analyst, you may be somewhat more concerned about compact code. Having to write a number of statements can be more difficult to maintain than a number of derived tables (depending on where these statements reside). The derived tables will make your statement longer, but overall, more compact. Temp tables, table variables or CTEs may be easier to understand.

EXPLAIN ANALYZE

In MySQL, you can use EXPLAIN ANALYZE to examine the types of joins the query optimizer is using (hash, nested loop, sort merge). This demonstrates the difference between the joins you write in SQL and the joins the query optimizer uses (you can use hints in your query to push the query optimizer to use a particular join, or index, but I would be weary of doing that unless you really know what you are doing). If you construct your query differently, it may use a different and more efficient join. The EXPLAIN ANALZE also includes the execution cost of your SQL statement, so you can see which version of your query is quicker. You would include EXPLAIN ANALZE like this:

EXPLAIN ANALYZE SELECT ta.col_1, sum_col_3, SUM(ta.col_2) as sum_col_2

FROM table_a ta INNER JOIN

(SELECT col_2, sum(col_3) as sum_col_3

FROM table_b

WHERE col_2 != 10

GROUP BY col_2) tb

on ta.col_1 = tb.col2

WHERE col_3 > 35

GROUP BY col_1,

sum_col_3

HAVING SUM(col_2) < 1000

SHOW (FULL) PROCESSLIST/KILL QUERY

If you are finding that your queries are taking an unusually long time to finish, you might want to check the processes that are running. In MySQL, you can use SHOW FULL PROCESSLIST (the equivalent in SQL Server is sp_who) to list all of your processes running (if you have PROCESS privileges, then you can see all running processes). Sometimes there is a process running that you started that you were not aware was still running. If that is the case and you want it to stop, you can use KILL QUERY followed by the PROCESSLIST Id. That will kill the query without killing the connection.

Optimizing Subqueries

Subqueries are different from derived tables in that they are not representing a table. They are usually used in the WHERE clause of a SQL statement. An example would be:

SELECT col_1, col_2 FROM table_a WHERE col_3 IN (SELECT col_6 FROM table_d WHERE col_1 = 23)

As you can see, the subquery does not need an alias (unlike a derived table). In this statement, only the data from table_a where col_3 is IN col_6 from table_d is returned. We can turn this into a correlated subquery by adding a condition from the outer query into the inner query, like this:

SELECT col_1, col_2 FROM table_a WHERE col_3 IN (SELECT col_6 FROM table_d WHERE col_1 = 23 and table_a.col_3 = col_6)

We would do this only if it helped improve performance of the query or the subquery was more complex and there were other reasons that it needed to be correlated to the outer query. Otherwise, it would just make the query appear more complicated. You can use EXPLAIN ANALZE to check performance.

Another type of subquery statement uses EXISTS instead of IN. We could rewrite the above like so:

SELECT col_1, col_2 FROM table_a WHERE EXISTS (SELECT col_6 FROM table_d WHERE col_1 = 23 and table_a.col_3 = col_6)

Again, you can use EXPLAIN ANALZE to compare performance.

Accessing MySQL Meta Data

Something else that I find helpful when writing queries is accessing the metadata. In MySQL, the metadata is stored in the INFORMATION_SCHEMA, which is a database in each MySQL instance. This is a table that can only be queried; no updates, inserts or deletes can be performed. The column column_comment in the columns table and table_comment in the tables table can be very useful, if it is actively used in your instance. Here is an example that will retrieve the database name, the names of the tables and columns, the column data type, the name of the column index key and the comments:

select t.table_schema

,t.table_name

,t.table_rows

,t.table_comment

,c.column_name

,c.column_type

,c.column_key

,c.column_comment

from information_schema.columns c

join information_schema.tables t on c.table_name = t.table_name

Working With JSON in MySQL

JSON is an open standard file format that stores data as a text file. If you are wondering what the difference is between JSON and XML, here are a few:

- XML supports displaying data because it is a markup language, JSON does not.

- In JSON, data is easier to access and can maintain data type (string, number, array, boolean, etc.), whereas in XML, everything is a string.

- There is native support for JSON and it has a type, XML is typeless and is not supported natively – only by conventions.

JSON Example

In MySQL, JSON can be stored in a text field and accessed as JSON (not just as text) or as a JSON datatype (since version 5.7.8). Here is an example of what JSON looks like:

{

"user": [

{

"firstName": "Julie",

"lastName": "Jones",

"address": {

"streetAddress": "111 Main St.",

"city": "Los Angeles",

"state": "CA",

"postalCode": "90505"

},

"firstName": "Mick",

"lastName": "Haberman",

"address": {

"streetAddress": "42 N. Sutter St.",

"city": "NY",

"state": "NY",

"postalCode": "10027"

}

]

}

To access the data inside JSON in MySQL, you will need to use some new statements. The challenge is that there can be multiple values within a single row (called a tuple in the relational model, derived from mathematics). For example, the above JSON holds two sets of data: one for Julie Jones and another for Mick Haberman. This, and more, can be stored in a single row in MySQL. Honestly, I can write a separate post on querying JSON.

Extracting JSON Data Elements

If you wanted to just extract the first name, that would work something like this, using the column-path operator ->:

SELECT col->$.firstName from table_a

Which would return "Julie", "Mick". You can also use the inline path operator ->>, with the difference being that the column-path operator will retrieve the quotes, escape characters, etc. and the inline path operator won’t include those, so you’re just getting the value inside the key data.

SELECT col->>$.firstName from table_a

Which would return Julie, Mick. There is a lot more to JSON and how to work with it in MySQL. However, I prefer to work with JSON in R, when possible. Some of the issues around JSON in databases is the lack of indexing and the size of JSON fields (they can get very large), which means very slow retrieval times and high overhead. I would prefer to parse JSON into proper relational tables as simpler datatypes.

CASE and UNION Statements

The last two advanced SQL examples are two functions that I have found very helpful. The first one is the CASE statement. This is like an IF statement but for multiple options. Without the CASE statement, you would need a number of nested IF statements that would be harder to write and maintain. Here’s a straight forward example:

SELECT col_1,

CASE WHEN col_2 = 1 THEN "orange"

WHEN col_2 = 2 THEN "green"

WHEN col_2 = 3 THEN "blue"

ELSE "other" END AS col_2_color

FROM table_a

WHERE col_3 > 35

Another useful SQL function is the UNION (ALL). You can use UNION to join all the unique rows of two tables or UNION ALL (similar to R’s rbind()) to join all rows. The number of rows must be the same and the returned dataset will have the topmost query’s names for the columns. Here is an example of how to use UNION:

SELECT 'table_a' AS table_source,

col_1,

CASE WHEN col_2 = 1 THEN "orange"

WHEN col_2 = 2 THEN "green"

WHEN col_2 = 3 THEN "blue"

ELSE "other" END AS col_2_color

FROM table_a

WHERE col_3 > 35

UNION

SELECT 'table_f' AS table_source,

col_5,

CASE WHEN col_7 = 6 THEN "orange"

WHEN col_7 = 8 THEN "green"

WHEN col_7 = 32 THEN "blue"

ELSE "other" END AS col_7_color

FROM table_f

WHERE col_9 != 0

I have left the CASE function in as well as added a column that is just a string to id the data source. I have also added a WHERE clause for col_9 not equal != to 0.

Both CASE and UNION can be very helpful when dealing with complex data (or messed-up data – you can use your imagination what could be happening in the example above, it’s not real, but I’ve dealt with similar challenges).

Conclusion

This post is certainly not definitive, but hopefully gives you more ideas as to how to write your SQL and demonstrate that there are a number of “correct” ways to write SQL. I focused on SELECT statements, since Analysts are usually retrieving data, not inserting/deleting/updating data. And for that, maintenance and performance is not as critical as it would be as part of production database code (and generally it’s bad practice to be querying a production database directly – there should be a separate reporting database of some kind). Still, I think it’s important to be aware of how your code taxes the database, how easy/difficult it would be to maintain and if there may be a way to write a query that is faster and simpler.

A Note on Data Retrieval

Apart from good SQL code, there are two things that may make accessing and retrieving data better – indexes and schema changes. The first one is easier to implement. Using the correct indexes on tables that you query cam make the difference between a query taking under a second or over five minutes.

The second is far more difficult and requires DBA and Database Developer support. Sometimes the data is not arraigned in a way that is suitable for good analysis. For example, a transactional database is designed for fast transactions, not for selecting and summarizing large sets of data. Flowing the transactional data into a new structure for reporting (think something like data warehouse, or an actual data warehouse) can make retrieving the data much more efficient and effective.

Hopefully this has been a a helpful post on how to write SQL beyond the simple SELECT statements. There is a lot more to SQL. If you want to learn more, check out these books by Itzik Ben-Gan: https://tsql.solidq.com/booksdisplay/. He is one of the best SQL programmers I know of. He concentrates on T-SQL (SQL Server’s flavor of SQL), but there is a lot of SQL techniques that can be used in any RDBMS.